Working with Thicket to evaluate the impact of 2016’s Flourishing Fellowship

We are excited to share the newly released report on Thicket's evaluation of the 2016 Lifehack Flourishing Fellowship.

This is a co-written blog post by Lifehack & Thicket, providing some background and context for the report, as well as what we learned from working together.

Lifehack on meeting Thicket

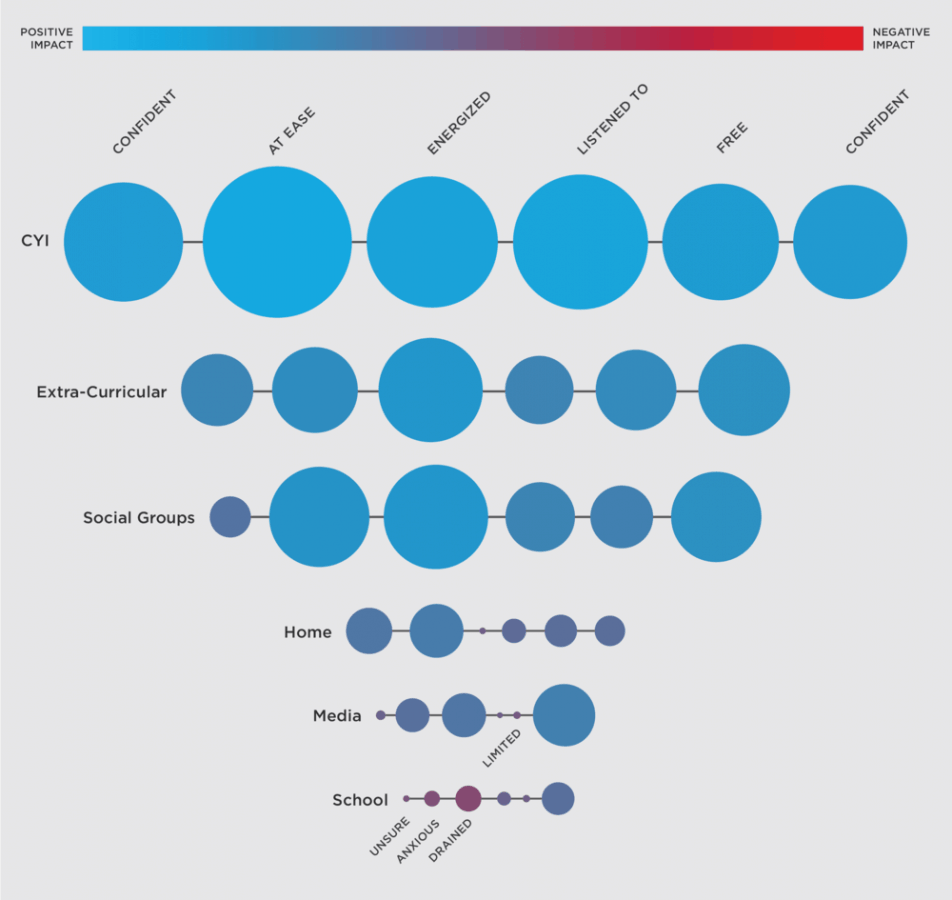

Thicket Labs specialise in ways to quantitatively measure the benefits of complex social change efforts, taking a participatory design and systems methodology approach to impact evaluation. We first came across Thicket when we discovered their public report assessing the nuanced social impact of Chinatown Youth Initiatives. It spoke to us because it tried to convey the impact of a programme, mapping participants’ personal experience against both the objectives of the initiative and the wider environment needed for positive change to happen in their lives.

Sample graphic from the Chinatown Youth Initiatives evaluation

At Lifehack HQ we had been radically upskilling our knowledge of evaluation, but we were not trained evaluators. While we had received ad hoc support from our funders for evaluating larger programmes, we were keen to find ways for the team itself to find out whether our programmes were making an impact in people’s lives. This took us from investigating the Most Significant Change technique for Lifehack Labs to using the idea of capitals to work out the multifaceted changes our programmes might be bringing about. Judging whether a programme was successful or not felt tricky when our overall approach was experimental, and learning was often the outcome, not just for the team but also for our programme participants. Thinking in terms of six categories of capital (social, human, financial, digital/physical, wellbeing and intellectual), based on some of Zaid Hassan’s practice from the Social Labs world, allowed us to assess our programmes in a more nuanced way.

What the capitals still didn’t answer was the question of longer-term impact and behaviour change. Did our programmes result in people changing their behaviours in lasting ways, as opposed to making one-off changes (which is nice, but not really good enough when we are seeking collective, cohort-level shifts)? Did people use their new-found knowledge and connections to take their work and ambitions in youth wellbeing further? And more importantly, if we’re seeking to intervene at a systems level, how do we know whether we’re on track? You can read more about our broader journey to understanding impact here.

If we can’t answer these questions, how do we know we’re on track with our overall mission of improving youth wellbeing in Aotearoa by building capability and capacity in those who work with young people?

—

Back in September 2015, Chelsea and Gina had the chance to meet Deepthi, one of the driving forces behind Thicket Labs, in person in New York City, when the Lifehack team was en route to present our work at IAYMH in Montreal. During our kōrero we heard more about the team’s data-analysis expertise and the tech platform’s ability to produce nuanced reports and outcomes. We were intrigued! So when the Fellowship came around for the second time, we got in touch with Deepthi and the team to work out if and how we could use Thicket’s platform, tools and resources to assess whether the Fellowship was having an impact on improving youth wellbeing.

Thicket’s thoughts on meeting Lifehack

When Thicket Labs launched in 2014, there weren’t a lot of groups out there taking a systems-change approach to their work, and even fewer that included a rigorous design thinking lens as well. It was after we released our impact report on our work with Chinatown Youth Initiatives that we started to make contact with more groups outside of New York with a similar philosophy and viewpoint to ours. When the Lifehack team reached out for an initial chat, our team was excited to see the parallels in our work.

When Chelsea and Gina made time to meet with me (Deepthi) on their trip through New York, I came away from our conversation struck by how rigorously they had committed to operating through a design-thinking mindset, and how unwaveringly they were keeping their eyes fixed on the goal of systems impact. At the time, I thought to myself that they had made massive progress from what they described as their initial starting point towards a more impactful model just by applying these tools. When the need for evaluation for the Flourishing Fellowship arose, our team jumped at the opportunity to work with Lifehack, particularly as we felt our co-design approach to evaluation was a perfect fit for their program.

Thicket’s thoughts on key learnings and challenges

Measuring behavior change among individuals and organizations is not new terrain for Thicket, and we’ve developed a quantitative method for collecting what is sometimes referred to as “thick” data: data that uncovers people’s emotions, stories, and models of their world. Working with Lifehack gave us the opportunity to push our methodology further in a co-design setting.

In our workshop, we asked Fellows to generate thick data on the systemic factors they believed were most influential, which we then used to evaluate their work. By helping construct the evaluation criteria themselves, the Fellows held up a mirror to their work and could reflect more rigorously on a) whether their projects were truly focusing on the factors they believed would make the most impact on the ground, and b) whether they were operationalizing what they learned through the fellowship.

Figure 1: The impact factors Thicket collected from the fellows as thick data, which were used to measure their work.

While most teams have the best of intentions about taking what they learn from professional development courses and applying it immediately in their jobs, instituting and sustaining operational changes within organizations can be the highest mountain to scale. We found that over the course of the Flourishing Fellowship, the Fellows reported that their projects were addressing more of their identified impact factors at each successive assessment. It was striking to see that while the fellows were not fully focusing on what they believed was most impactful at the outset, they were collectively able to make operational changes towards a common vision through the program. To us, that signalled the success of the Flourishing Fellowship.

We also learned that the areas fellows are struggling most to operationalize include addressing pervasive feelings of shame in youth, bolstering opportunities for young people to connect with their Māori identity, and providing resources that help build cohesive relationships in the community. These are BIG issues to tackle. We’re hoping to see innovative solutions to some of these issues emerge from a co-design process with youth.

How did this process help us? What have we been able to do as a result?

The Thicket process provided us with a range of ways to think about tracking and visualising programme engagement. For example:

A petri dish approach to visualising programme composition

It particularly helped us to create a link between the challenges and opportunities Fellows had identified in their communities and how the Fellowship was supporting them to address those. Specifically, we were able to map how participants had changed the focus of, or approach to, their programmes as a result of what they were learning in the Fellowship. A key part of this was the envision workshop Thicket ran, where Fellows mapped out the barriers, strategies and outcomes for youth wellbeing in their communities.

Dougal shares a kōrero at the 2016 Fellowship

As noted in the report, these helped us build a bottom-up picture of the challenges our Fellows see in their communities, using their own language, as well as the strategies they believe are key to addressing those challenges. We believe there is significant potential to build on this activity in 2017, aligning it with what we learnt in the development of our Impact Model. We hope to repeat a similar exercise at scale, using it to highlight pinch points within the system that are not currently getting enough support or focus, and to show the key outcomes that are common and important across Aotearoa and how they are being achieved.

Another crucial insight was learning who the programme was really useful for. The evaluation aligned with other findings that were coming through as we developed our Lifehack Impact Model, showing us that the programme was most useful to those who have the opportunity to apply their knowledge to youth wellbeing initiatives. The Thicket evaluation also showed that people who had found their Why when it comes to youth wellbeing were more engaged. As a result, we have been able to strengthen the programme focus and recruitment for this year’s Fellowship, focusing it on supporting people who can apply their knowledge because they already have the capacity to influence youth wellbeing within their communities.

What do we now know about evaluating programmes like this, and what are we doing next as a result?

It’s exciting for us to have worked with Lifehack to take our work in measuring behavior change among organizations and distill it into a new approach to sector-wide evaluation. By using workshops to develop our process for collecting quantified thick data, we’ve been able to identify and address some of the weaknesses of surveys in capturing the meaningful relationships behind the data. In doing so, we’ve innovated on the way we capture data digitally, so that we can scale up our data collection to be both thick and big. By taking a natural-language approach to our data collection interface, we circumvent the artificial environment of a survey and draw out more meaningful statements from participants, while still building the structured dataset needed for quantitative analysis.

Another area where we at Thicket have pushed ourselves is in visualizing the data we collected. In the report, we tried a mix of approaches to visualizing our thick datasets; each holds a compelling insight, though not all were fully realized. When it comes to repeating this process at a bigger scale, such as across the whole of Aotearoa, we would not only use technology to scale up our data collection but also use refined visualizations to display the data. We’re looking forward to that.

A preview of one of Thicket’s new tools for visualizing thick data; specifically, the relationships between collaborating partners.

—

Conclusion

Working together has shown us the need to evaluate social-impact programmes with nuance and rigour. Emerging methodologies for tracking impact across collective and social spaces, in particular the use of qualitative data, demonstrate what’s possible when human dedication and commitment to learning meet technological advances (and the systems/developer brains behind them).

Working with Thicket has helped us create clarity around what we’re looking to learn, and which indicators might help us assess whether we’re on track. A big mihi (gratitude and thanks) to the Thicket team, in particular Deepthi and Nadia. Your openness to working on this with us across continents, oceans and time zones has been great, and your tireless drive to make the output the best it can be has been amazing.

We encourage you to have a look at the report. Some parts of the report are interactive: clicking on certain images takes you to interactive areas like this one.